
The author finalized this article on January 15, 2020.

Abstract

This article introduces Augmented Reality (AR) technology. AR is a new technology that enhances users' perception of the real world. It is generally believed that AR emerged from the development of Virtual Reality (VR), but there are obvious differences between the two. Traditional VR immerses the user completely in a virtual world, creating another world. AR brings the computer into the user's real world and enhances the user's perception of that world with virtual information that can be seen, heard, and touched. This achieves a shift from "people adapt to machines" to "people-oriented" technology.

What is Augmented Reality?

Catalog

Abstract

I AR Technology Principles

1. Vision-Based AR

2. LBS-Based AR

II Augmented Reality Technical Support

1. Identification and Tracking Technology

2. Reality Technology

3. Interaction Technology

III The Common Expression Ways of AR Technology

1. Basic 3D Model

2. Video

3. Transparent Video

4. Scene Display

5. AR Games

6. VR Combination

7. Large Screen Interaction

IV AR System Composition

1. Monitor-based System

2. Video See-through System

3. Optical See-through System

4. Comparison of the Performance of the Three Systems

V Book Suggestion

I AR Technology Principles

In terms of technical means and forms of expression, AR can be divided into two categories. One is vision-based AR, which relies on computer vision; the other is LBS-based AR, which relies on geographical location information (LBS stands for location-based services).

1. Vision-Based AR

Vision-based AR uses computer vision methods to establish a mapping between the real world and the screen, so that graphics or a 3D model can be displayed on the screen as if attached to a real object. How is this done? Essentially, find a plane in the real scene to attach to, map that plane from the 3D scene onto the 2D screen, and then draw the desired graphics on the plane. In terms of technical means, there are two categories:

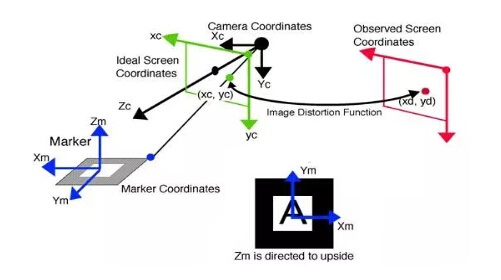

(1) Marker-Based AR

This method requires a pre-made Marker (for example, a template card or a 2D barcode of a certain specification). The Marker is placed somewhere in the real scene, which is equivalent to fixing a plane in that scene. The camera then recognizes the Marker and estimates its pose to determine its location. The coordinate system whose origin is the Marker center is called Marker Coordinates, or the template coordinate system. What we actually need is a transformation that maps the template coordinate system to the screen coordinate system, so that graphics drawn on the screen according to this transformation appear attached to the Marker. Understanding the principle requires a little 3D projective geometry. The transformation from the template coordinate system to the screen coordinate system first rotates and translates into the camera coordinate system (Camera Coordinates), and then projects from the camera coordinate system onto the screen coordinate system.

Marker-Based AR

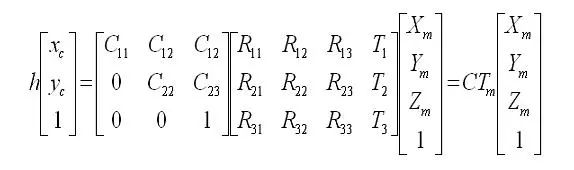

In actual code, each of these transformations is a matrix. In linear algebra, a matrix represents a transformation, and left-multiplying a coordinate vector by a matrix applies a linear transformation. Translation is not a linear transformation, but with homogeneous coordinates it can also be expressed as a matrix operation. The formula is as follows:

Formula 1

The matrix C is called the camera intrinsic matrix (intrinsic parameter matrix), and the matrix Tm is called the camera extrinsic matrix. The intrinsic matrix is obtained in advance by camera calibration, while the extrinsic matrix is unknown. We need to estimate Tm from the screen coordinates (xc, yc), the predefined Marker coordinate system, and the intrinsic matrix, and then draw the graphics according to Tm. The initial estimate of Tm is not accurate enough and needs to be refined iteratively with nonlinear least squares. When drawing with OpenGL, for example, the Tm matrix is loaded in GL_MODELVIEW mode for display.
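To make the chain of transformations concrete, here is a minimal sketch in plain Python. It assumes the standard pinhole camera form, in which h·(xc, yc, 1) = C · Tm · (Xm, Ym, Zm, 1), with C a 3x3 matrix and Tm a 3x4 rotation-plus-translation matrix. The numeric values of `C` and `Tm` and the helper names are hypothetical, chosen only for illustration, and the sketch covers the forward projection only, not the estimation of Tm.

```python
def mat_vec(m, v):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def project_marker_point(C, Tm, point_m):
    """Map a Marker-coordinate point (Xm, Ym, Zm) to screen coordinates (xc, yc)."""
    Xm, Ym, Zm = point_m
    # Homogeneous coordinates let the 3x4 matrix Tm apply rotation
    # and translation in a single matrix product.
    cam = mat_vec(Tm, [Xm, Ym, Zm, 1.0])  # point in camera coordinates
    hx, hy, h = mat_vec(C, cam)           # projective screen coordinates
    return hx / h, hy / h                 # divide out the scale factor h


# Hypothetical intrinsics: focal length 800 px, principal point (320, 240).
C = [[800.0, 0.0, 320.0],
     [0.0, 800.0, 240.0],
     [0.0, 0.0, 1.0]]

# Hypothetical pose: no rotation, Marker 2 units in front of the camera.
Tm = [[1.0, 0.0, 0.0, 0.0],
      [0.0, 1.0, 0.0, 0.0],
      [0.0, 0.0, 1.0, 2.0]]

center = project_marker_point(C, Tm, (0.0, 0.0, 0.0))
corner = project_marker_point(C, Tm, (0.5, 0.5, 0.0))
print(center, corner)
```

With these assumed values the Marker center lands on the principal point, and a point offset on the Marker plane lands to the lower right of it, which is the behavior needed to draw graphics that stay attached to the Marker.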

(2) Marker-Less AR

The basic principle is the same as that of Marker-Based AR, but any object with enough feature points (for example, a book cover) can serve as the planar reference. No special template has to be made in advance, which frees AR applications from their dependence on templates. Feature points are extracted from the template object with an algorithm such as SURF, ORB, or FERN, and these feature points are recorded or learned. When the camera scans the surrounding scene, feature points are extracted from the scene and compared with the recorded template feature points. If the number of matches between scene feature points and template feature points exceeds a threshold, the template is considered found. The coordinates of the corresponding feature points are then used to estimate the Tm matrix, and the graphics are drawn according to Tm (as in Marker-Based AR).
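The threshold test described above can be sketched as follows. This is a simplified illustration, not a real feature pipeline: it assumes descriptors are short binary strings stored as integers (binary descriptors are what ORB produces, though real ones are much longer), compares them by Hamming distance, and uses hypothetical values for `max_distance` and `min_matches`.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def count_matches(template_desc, scene_desc, max_distance=1):
    """Count template descriptors that have a close enough scene descriptor."""
    matches = 0
    for t in template_desc:
        best = min((hamming(t, s) for s in scene_desc), default=None)
        if best is not None and best <= max_distance:
            matches += 1
    return matches


def template_detected(template_desc, scene_desc, min_matches=3, max_distance=1):
    """The template counts as found when enough descriptors match."""
    return count_matches(template_desc, scene_desc, max_distance) >= min_matches


# Toy 8-bit descriptors recorded from the template object (e.g. a book cover).
template = [0b10110010, 0b01101100, 0b11110000, 0b00001111]

# A scene containing the object (slightly noisy descriptors) and one without it.
scene_with_book = [0b10110011, 0b01101100, 0b11110001, 0b00101111, 0b10101010]
scene_without = [0b01010101, 0b00110011]

print(template_detected(template, scene_with_book))
print(template_detected(template, scene_without))
```

Only after this test passes would the matched point coordinates be handed to the pose estimation step that produces Tm.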

2. LBS-Based AR

The basic principle is to obtain the user's geographical location through GPS, fetch POI (point of interest) information for nearby objects (such as restaurants, banks, and schools) from data sources (such as wiki or Google), and then read the direction and tilt angle of the user's handheld device from the mobile device's electronic compass and acceleration sensor. This information establishes the planar reference (equivalent to the Marker) of the target object in the real scene. The coordinate transformation and display then work much as in Marker-Based AR.

This kind of AR is implemented with the device's GPS and sensors, which removes the application's reliance on a Marker, and the user experience is better than with Marker-Based AR. Because it does not need to recognize the Marker pose or compute feature points in real time, it performs better than Marker-Based AR and Marker-Less AR and is therefore better suited to mobile devices.
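As a rough sketch of how location and compass data can place a POI label on screen, the code below computes the bearing from the user to a POI and maps it to a horizontal pixel position. The function names, the 60 degree field of view, and the 1080 pixel screen width are assumptions for illustration. The linear angle-to-pixel mapping is a simplification that ignores device tilt and perspective.

```python
import math


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, in degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0


def screen_x(poi_bearing, heading, fov_deg=60.0, screen_width=1080):
    """Horizontal pixel position of a POI label, or None if it is outside the field of view."""
    # Signed angle from the viewing direction to the POI, wrapped into [-180, 180).
    offset = (poi_bearing - heading + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2:
        return None
    return (offset / fov_deg + 0.5) * screen_width


# Hypothetical user on the equator with a POI slightly to the east.
poi_bearing = bearing_deg(0.0, 0.0, 0.0, 1.0)
print(poi_bearing)                   # bearing of the POI from the user
print(screen_x(poi_bearing, 90.0))   # device facing east: label is centered
print(screen_x(poi_bearing, 270.0))  # device facing west: label is not drawn
```

The modulo step matters near north: a POI at bearing 10 degrees seen from a heading of 350 degrees is 20 degrees to the right, not 340 degrees to the left.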


II Augmented Reality Technical Support

1. Identification and Tracking Technology

Realizing augmented reality involves analyzing the real scene and its information, and then generating the virtual object information. These two steps sound simple. In practice, the video stream of the real scene captured by the camera must be converted into digital images, and the pre-set markers are then identified with image processing techniques.


Once the marker has been identified, it serves as a reference. Combined with positioning techniques, the augmented reality program determines the position and orientation of the 3D virtual object to be added to the augmented reality environment, and determines the orientation of the digital template. The pattern in the marker is matched against the preset digital template images to determine the basic information of the 3D virtual object to be added. The program then creates the virtual object and places it in the correct location based on the location of the identified marker. Identification, tracking, and positioning are among the biggest challenges in augmented reality.

To combine the virtual and the real convincingly, we must determine the exact location and orientation of virtual objects in the real environment; otherwise the effect of augmented reality is greatly reduced. Because real environments are imperfect, or complex, an augmented reality system performs far worse there than in the ideal environment of a laboratory. Occlusion, defocus, non-uniform illumination, and fast object movement in real-world environments all pose challenges to the tracking and positioning system.

If you do not consider devices that interact with augmented reality, there are two main ways to implement tracking and positioning:

(1) Image Detection

Image detection uses pattern recognition techniques (including template matching and edge detection) to identify the pre-set markers, reference points, or contours in the acquired digital image. A transformation matrix is then calculated from their offset distance and deflection angle to determine the location and orientation of the virtual object.


This method needs no additional equipment for tracking and positioning, and it is highly accurate, so it is the most common positioning method in augmented reality. With template matching, the system stores a variety of templates in advance and matches them against the markers detected in the image to calculate the position. Simple template matching improves the efficiency of image detection, which helps keep augmented reality real-time. By calculating the offset and deflection of the markers in the image, the three-dimensional virtual object can also be viewed from every direction. Template matching is generally used to map a specific image to a three-dimensional model: the device scans the image, matches the special marks in it against a pre-stored template, and presents the corresponding three-dimensional virtual model. For example, car model cards and toy company character cards can use template matching for augmented reality. Edge detection can detect parts of the human body and track their movement, so that they can be fused seamlessly with virtual objects. For example, when a virtual object is tied to the user's hand, the camera can adjust the position of the virtual object by tracking the outline and movement of the hand. For this reason, many virtual shopping applications use edge detection.
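Template matching itself can be illustrated with a very small sketch. The version below slides a stored template over a grayscale image and scores each position with the sum of absolute differences (SAD). SAD is one simple scoring choice assumed here for illustration, the image and template values are made up, and a practical system would use an optimized library routine and handle rotation and scale.

```python
def match_template(image, template):
    """Return (row, col, score) of the best match, scored by sum of absolute differences."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    best = None
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            sad = sum(
                abs(image[r + i][c + j] - template[i][j])
                for i in range(th)
                for j in range(tw)
            )
            # Keep the first position with the lowest score so far.
            if best is None or sad < best[2]:
                best = (r, c, sad)
    return best


# Toy grayscale image containing a 2x2 marker pattern at row 1, column 2.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 1, 0],
    [0, 0, 1, 9, 0],
    [0, 0, 0, 0, 0],
]
template = [
    [9, 1],
    [1, 9],
]

row, col, score = match_template(image, template)
print(row, col, score)
```

The returned position is the offset of the marker in the image, which is the kind of measurement the transformation matrix is then computed from.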

Although image detection is simple and efficient, it has shortcomings. It is mostly used in relatively ideal, close-range environments, where the video stream and images are clear and positioning is easy to calculate. Outdoors, however, variations in lighting, occlusion by objects, and focusing problems can prevent the augmented reality system from recognizing the markers in the image, or cause it to confuse them with similar-looking images, which degrades the augmented reality effect. In such cases, other tracking and positioning methods are needed.

(2) Global Positioning System

This method uses detailed GPS information to track and determine the user's geographic location. As the user walks through the real environment, this positioning information, together with the direction of the user's camera, lets the augmented reality system accurately place virtual information and virtual objects in the environment and around the people nearby. Smartphones are now widespread, and they integrate the basic components that an augmented reality system needs for GPS-based positioning: a camera, a display, GPS, a processor, a digital compass, and so on. This tracking method is therefore used mostly on such smart mobile devices. The augmented reality browser is the main application of this method. It runs on a smartphone, connects to the Internet to search for relevant information, and then shows the user that information overlaid on the real environment. Augmented reality browsers let users learn about virtually everything in the direction the camera is pointing, such as finding a nearby restaurant that is hidden from view, or reading other users' opinions of a cafe.


This positioning method is suitable for outdoor tracking and positioning. It overcomes the problems that uncertain outdoor conditions, such as lighting and focus, cause for image detection.

In practice, augmented reality systems often combine more than one method for orientation and positioning. For example, augmented reality browsers also use image detection to detect certain symbols, such as QR codes, and recognizing a QR code through template matching can provide the user with information.

2. Reality Technology

Augmented reality currently relies on three main display technologies:

(1) Mobile handheld display

(2) Video space display and space-enhanced display

(3) Wearable display

In a mobile handheld display, a smartphone captures the live view through the corresponding software and displays the digital image superimposed on it. Tablet PCs, which keep gaining functionality and have larger screens than smartphones, are also increasingly popular for this purpose.


In video space display mode, the user holds an augmented reality marker in front of a webcam, and the virtual overlay image is shown in a window or on a display. Greeting cards with augmented reality work this way: after receiving the card, the user logs in to the corresponding website and points the webcam at the card, and the display shows the virtual objects and videos generated from the information stored in the card. Space-enhanced display technology uses video projection, including holographic projection, to display virtual digital information directly in the real environment. Unlike general augmented reality systems, which are suitable only for personal use, it combines augmented reality with the surrounding environment and is not limited to a single user. This technology suits universities or libraries, where it can provide augmented reality information to a group of people at the same time. Control components can also be projected onto a corresponding physical model to make interactive operation easier for engineers.

A wearable display is a glasses-like or helmet-like display worn on the user's head; Google Glass is a familiar and much-anticipated example. Wearable displays generally have a small display with two embedded lenses and semi-transparent mirrors, and they are widely used in flight simulation, engineering design, and education and training. A head-mounted device lets the user experience augmented reality more naturally and can provide a larger field of view, giving a stronger and more realistic sense of presence.

3. Interaction Technology

The most basic form of human-computer interaction in augmented reality is the user viewing virtual data. Beyond that, there are several other interaction technologies:


(1) Tactile Interaction

Tactile interaction uses digital information to provide a sense of touch, making the virtual content feel more real. Examples include a virtual ball of light that can be touched, or a phantom pen that can paint on a virtual bowl.

(2) Cooperative Interaction

Cooperative interaction uses multiple displays to support remote sharing and interaction, or collaborative activities in the same place. It can be integrated with a variety of application software and used for diagnostics and surgery in the medical field, or for equipment maintenance.

(3) Hybrid Interaction

Hybrid interaction combines several different but functionally complementary interfaces so that users can interact with augmented reality content in multiple ways. This makes interaction more flexible, and it can be used for digital model testing.

(4) Multimodal Interaction

Multimodal interaction lets users interact with real objects through natural forms of language and behavior, such as speech, touch, natural gestures, and gaze. Users can flexibly combine multiple modalities, which makes it easier to interact with augmented reality systems.

III The Common Expression Ways of AR Technology

1. Basic 3D Model

The 3D model (static or dynamic) is the most basic form of expression in AR technology; examples include animated characters, architecture, exhibits, and furniture. At present, the domestic AR industry is in an early stage of development, and 3D models are mainly used in entry-level mobile AR apps. Although this form is the most basic, it also has the widest range of application scenarios, the lowest development cost, and the greatest market popularity.


2. Video

Compared to a simple 3D model, an eye-catching video display undoubtedly draws more attention, and in business operations its economic benefits are better. For example, once AR technology is applied to ordinary product installation instructions, menu explanations, or leaflets, they are no longer flat images but three-dimensional ones, and the presentation becomes accurate and vivid, almost magical. AR technology has a large market to explore and expand in such scenarios. Note that playing video with AR technology is not difficult; the difficulty lies in creating a video suited to the AR scene, which takes imagination and careful craftsmanship.

3. Transparent Video

At first sight, a transparent video looks like an ultra-high-definition 3D character model, but strictly speaking it is a specially processed video displayed with transparency. It does not carry the high cost of a 3D model, yet it delivers a realistic performance. Used with large posters, brochures, shopping malls, and similar settings, it can be very effective.

4. Scene Display

A scene display is more than a simple 3D model overlay. Although it resembles the basic 3D model form, it is much more complicated to implement than a single 3D model, and a scene contains more content and has a wider range of applications. Entertainment, three-dimensional reading, games, and other applications all need to present scenes, and building such scenes of course requires supporting content. An AR scene differs from the fully virtual scene built in VR: it is based on reality and intertwined with it. This is exactly what makes AR technology fascinating.


5. AR Games

AR technology also brings great innovation to gameplay. "Pokemon Go", "Little Dragon Sparrow", and "Magical Reality New Hero Card" are all very good AR games. Imagine that future games no longer require complex scene modeling and are instead played in the real world, where many virtual objects can appear superimposed on reality. This is a great experience! Games can also escape the constraints of time and space: you can start playing anytime, anywhere.

6. VR Combination

Together, AR and VR technologies enrich our real world. AR aims to enhance the content of the world we live in, while VR shifts our attention from reality to a virtual space. Combining AR and VR should provide a better experience. For example, with a VR device and an AR display, you would not need guides or narrators: the enhanced information returned by AR would appear in real time next to whatever you are focusing on, telling you what it is and more about it. Integration of this kind could also appear in navigation, medicine, and other fields.

7. Large Screen Interaction

As an extension of AR display technology, large-screen interaction is also impressive. It is mainly used in shopping malls, museums, experience centers, and large-scale events such as concerts. Put simply, large-screen interaction is AR technology plus projection, creating a more realistic and striking scene and atmosphere.


IV AR System Composition

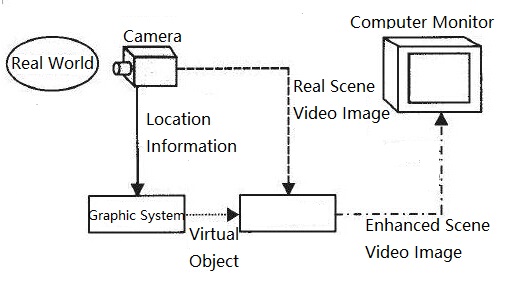

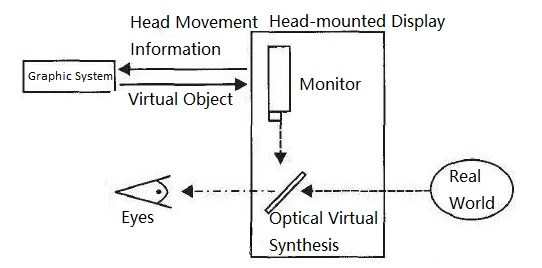

1. Monitor-based System

In a monitor-based AR implementation, a real-world image captured by a camera is fed into a computer, composited with a virtual scene generated by the computer graphics system, and output to a screen. The user sees the final enhanced scene on the screen. Although it gives the user little sense of immersion, it is the simplest way to implement AR. Because this solution has low hardware requirements, it has been widely adopted by AR researchers in the laboratory.
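The compositing step can be sketched as per-pixel alpha blending. This is only one common way to combine the two images and is assumed here for illustration. Frames are represented as nested lists of pixel tuples, with an alpha value per virtual pixel, and the function name `composite` is hypothetical.

```python
def composite(real_frame, virtual_frame):
    """Overlay virtual pixels (r, g, b, alpha) on real pixels (r, g, b)."""
    out = []
    for real_row, virt_row in zip(real_frame, virtual_frame):
        row = []
        for (rr, rg, rb), (vr, vg, vb, a) in zip(real_row, virt_row):
            # alpha = 1 shows only the virtual pixel, alpha = 0 only the camera pixel.
            row.append((
                round(a * vr + (1 - a) * rr),
                round(a * vg + (1 - a) * rg),
                round(a * vb + (1 - a) * rb),
            ))
        out.append(row)
    return out


# One-row camera frame in uniform gray.
real = [[(100, 100, 100), (100, 100, 100), (100, 100, 100)]]

# Virtual frame: opaque red, fully transparent, and half-transparent red pixels.
virtual = [[(200, 0, 0, 1.0), (200, 0, 0, 0.0), (200, 0, 0, 0.5)]]

enhanced = composite(real, virtual)
print(enhanced)
```

Where the virtual scene has nothing to draw, its alpha is zero and the camera image shows through unchanged.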

Monitor-based System

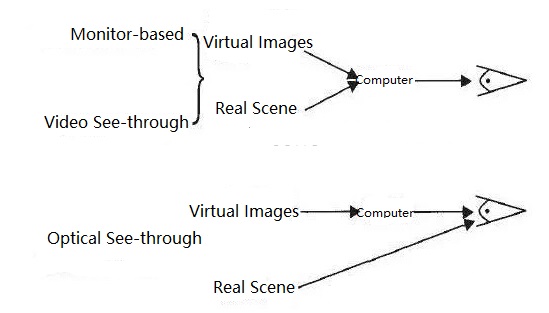

2. Video See-through System

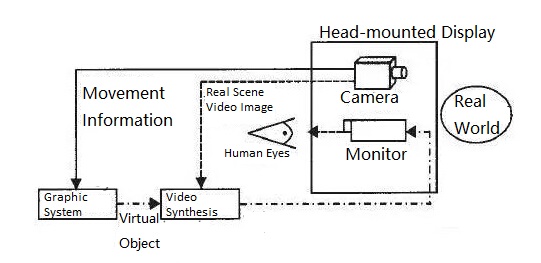

Head-mounted displays (HMDs) are widely used in virtual reality systems to enhance the user's visual immersion. Augmented reality researchers have adopted similar display technology: the see-through HMD, which is widely used in AR. By implementation principle, see-through HMDs fall into two major categories: the video see-through HMD, based on video synthesis technology, and the optical see-through HMD, based on optical principles.


3. Optical See-through System

In the two solutions above, two channels of information are input to the computer: a computer-generated virtual information channel and a real scene channel from the camera. The optical see-through HMD solution removes the latter. The image of the real scene enters the human eye directly after a certain amount of light attenuation, the virtual channel's information enters the human eye after being projected and reflected, and the two are combined optically.


4. Comparison of the Performance of the Three Systems

The three AR display implementations each have advantages and disadvantages in performance. In monitor-based and video see-through implementations, images of the real scene are captured by cameras, and the virtual and real images are combined and output by the computer. The whole process inevitably introduces some system delay, which is a major cause of registration errors in dynamic AR applications. However, since what the user sees is entirely under the computer's control, this delay can be compensated by coordinating the virtual and real channels inside the computer. In optical see-through implementations, the view of the real scene reaches the eye in real time and is not controlled by the computer, so the system delay cannot be compensated by controlling the video display rate.


In addition, in monitor-based and video see-through implementations, the computer can analyze the input video and extract tracking information (reference points or image features) from the images of the real scene to assist the registration of virtual and real scenes in dynamic AR. In optical see-through implementations, only the helmet position sensor is available to assist virtual-real registration.
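One way to picture the delay compensation available to monitor-based and video see-through systems is to buffer camera frames and pair each rendered virtual image with the camera frame closest to it in time. The sketch below illustrates that idea only. The class name `FrameSynchronizer`, its methods, and the timestamps are hypothetical, and real systems may compensate delay in other ways.

```python
from collections import deque


class FrameSynchronizer:
    """Buffer camera frames so the real channel can be delayed to match the virtual one."""

    def __init__(self, max_frames=30):
        # Each entry is (timestamp_in_seconds, frame); the oldest entries drop off.
        self.frames = deque(maxlen=max_frames)

    def add_camera_frame(self, timestamp, frame):
        self.frames.append((timestamp, frame))

    def frame_for_render(self, render_timestamp):
        """Return the buffered (timestamp, frame) closest in time to the virtual render."""
        if not self.frames:
            return None
        return min(self.frames, key=lambda tf: abs(tf[0] - render_timestamp))


sync = FrameSynchronizer()
sync.add_camera_frame(0.000, "frame-0")
sync.add_camera_frame(0.033, "frame-1")
sync.add_camera_frame(0.066, "frame-2")

# The virtual image was rendered from tracking data sampled at t = 0.030 s,
# so it is shown together with the camera frame captured nearest that time.
paired = sync.frame_for_render(0.030)
print(paired)
```

An optical see-through system has no such buffer to draw on, because the real scene never passes through the computer.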

V Book Suggestion

1. Practical Augmented Reality

This is the most comprehensive and up-to-date guide to the technologies, applications and human factors considerations of Augmented Reality (AR) and Virtual Reality (VR) systems and wearable computing devices. Ideal for practitioners and students alike, it brings together comprehensive coverage of both theory and practice, emphasizing leading-edge displays, sensors, and other enabling technologies and tools that are already commercially available or will be soon.

--by Steve Aukstakalnis

2. Augmented Reality for Developers

The book opens with an introduction to Augmented Reality, including markets, technologies, and development tools. You will begin by setting up your development machine for Android, iOS, and Windows development, learning the basics of using Unity and the Vuforia AR platform as well as the open source ARToolKit and Microsoft Mixed Reality Toolkit.

--by Jonathan Linowes, Krystian Babilinski
